One day, I decided to try a free trial of Tidal Masters. For those unaware, Tidal is a streaming service, like Spotify. Its supposed advantage is that it can stream lossless music, thereby catering to discerning audiophiles (Masters is their highest tier).

If you were to acquire one of these Tidal lossless files, in FLAC format, you would find that they play fine. Tidal, however, advertises that their lossless files can be enhanced even further, to a supposed "master" quality, if you get a special piece of software (and/or hardware; more on this later) that can decode proprietary data in the FLAC file. This system is called MQA (Master Quality Authenticated).

I did not feel like getting a DAC or the software (Roon) that automatically decodes the MQA data on the fly while the FLAC files play, so I wanted to see if I could decode the MQA data in the FLAC files myself. For absolutely no reason (as in, I can't really hear the difference either way), I ultimately decided to replicate the DAC/software's on-the-fly decoding. I am not very good at reverse-engineering (despite spending hours trying), so I never reverse-engineered the MQA algorithms myself.

Instead, someone named Mans Rullgard discovered that a Bluesound DAC that supports MQA runs an embedded Linux (for ARMv7), and that this embedded Linux has a library called libbluos_ssc.so containing all the MQA functionality. He then tried to reverse-engineer MQA using that library, and the results of that work are in this VideoLAN GitLab repository. The repository has zero documentation, but it's all I could find.

Decoding MQA Files

The mqa repository seems to contain a bunch of MQA identification files, but it also contains two crucial files, mqadec.c and mqarender.c. On an ARMv7 platform, these compile into programs that call libbluos_ssc.so to decode and render MQA files, respectively.

Recall that I said MQA could have both hardware and software components. MQA works in folds. The first unfold can be done in either hardware or software, but the second and third unfolds can only be done in hardware. Each unfold doubles the sample rate of the audio (the official MQA website's description is worth consulting as well). The first unfold is called decoding, and the second/third unfolds are called rendering. Interestingly, both functions are implemented in libbluos_ssc.so. This means that MQA's "hardware component" does not depend on special hardware; it could be implemented entirely in software (e.g. in Roon), but in practice it is not.

Some MQA files can only be unfolded once, and for those the "hardware component" doesn't matter. It only matters for files that need more than one unfold to reach maximum quality. As you might guess, mqadec does the first unfold, and mqarender does the second/third (I don't think I ever got the third to work, though; barely any files support it anyway). You can use another tool in the mqa repo, mqascan if I remember correctly, to figure out how many folds a given MQA file supports.

For a file that requires two unfolds, producing the fully unfolded output might look something like this:

```sh
mqadec InputFLACFile.flac 1FoldFile.flac
mqarender 1FoldFile.flac DoubleUnfoldedFile.flac
```

On top of this, you have to run mqascan first to figure out the number of folds, so this is not very automatable. Another issue is that rendered MQA files are massive, which makes storing them impractical. Rendering MQA files also takes a very long time, so decoding them ahead of time is completely impractical if your goal is playback (if your goal is audio analysis, it is probably sufficient). At this point, my goal shifted to real-time playback.

Combining Decoding and Rendering

I wanted to first consolidate mqadec.c and mqarender.c into one file that did both at once. The end result is mqaplay.c.

mqadec.c is unwieldy and unintuitive, which is the fault of libbluos_ssc.so's interface. A function called ssc_decode_open takes two callback functions: one that gives the program a turn to read samples into a buffer, and one that lets it write the decoded samples out. mqarender.c is much more intuitive: it uses a simple for loop and a function called ssc_render that reads from an input buffer and writes into an output buffer. To combine the two, I started with mqadec.c and invoked ssc_render inside the write callback. You can preview this file (mqaplay.c) here. It is full of debugging code and probably of questionable quality (as expected, forgetting to divide by 2 somewhere produces a completely mangled audio file, and I made that mistake too many times). It also only works for audio files with two folds.

Real-Time Playback Using Pipes

At this point, all execution has to happen in an ARMv7 environment. On an ARMv7 machine with audio output, you could simply use some audio API. I was too lazy to get this working on my Raspberry Pi, so I ran all of mqa inside an Arch Linux ARM chroot on my x86 machine using qemu-user-static. To set this up, install the AUR package of that name, download any Arch Linux ARM ARMv7 .tar.gz file, extract it, run sudo arch-chroot path-to-extracted-targz-file, and follow the keyring set-up steps.

To bridge the two environments, I invoked a compiled mqaplay with qemu-arm-static (with the right LD_LIBRARY_PATH) and piped its standard output to an audio player (e.g. ffplay), since I could not figure out how to use audio libraries inside the chroot and have them work on the host. This required changing the libsndfile output format from SF_FORMAT_WAV | SF_FORMAT_PCM_24 to headerless raw PCM (SF_FORMAT_RAW | SF_FORMAT_PCM_24), because a WAV file cannot be written to a pipe: its header contains size fields that are only filled in by seeking back to the start of the file once writing is finished.

With the same raw-output workaround, mqaplay.c is also suitable for real-time playback. Using a compiled mqaplay, a double-folded MQA file can be played back in real time like this:

```sh
LD_LIBRARY_PATH=path/to/chroot/usr/lib qemu-arm-static path/to/mqaplay | ffplay -ar 192000 -ac 2 -f s24le -
```

You must also copy path/to/chroot/lib/ld-linux-armhf.so.3 to the host's /lib/ld-linux-armhf.so.3 for this command to work, since qemu-arm-static needs the ARM dynamic loader to start the dynamically linked mqaplay binary.

Calling libbluos_ssc.so From an amd64 Binary

Note: this section is longer and more detailed since I spent the most time on this part.

Pipes are not a great solution. A better one would be to just magically call libbluos_ssc.so from an amd64 binary, but I have no idea how to do that. It probably involves using qemu as a library (somehow), or some other ARM binary emulator library (like dynarmic). The latter is probably what I'm supposed to use (there even seems to be an Android library emulator). The way I chose to solve this problem is probably a terrible approach, and I have a lot to learn.

I settled on a multiple-binary approach, where a process running in the ARMv7 chroot uses an IPC mechanism to communicate with a library or binary in the x86 environment (I wanted it to be fast, since this is audio, so I chose shared memory [SHM]). This turns into the producer-consumer problem (I did not realize this was what I was implementing at first). If I want to play audio in real time, the ARM process is the producer and the audio player is the consumer. My initial pseudocode for this case looked like this:

Consumer (the audio player on the x86 host):

```c
// Pseudocode: shared_mem, audio_file, play_audio, read_into_buffer and
// render_audio are placeholders. `producing` is an atomic flag
// (_Atomic bool), so the compiler cannot hoist the load out of the spin.
int main() {
    // We don't have to loop forever, but we do in this example
    while (true) {
        // shared_mem is a pointer to a piece of memory shared between
        // the producer and consumer. We spin until the producer
        // is finished.
        while (atomic_load(&shared_mem->producing)) {}

        // Play back the audio buffer in SHM written to by the producer
        play_audio(shared_mem->rendered_audio_buffer);

        read_into_buffer(audio_file, shared_mem->to_render_audio_buffer);

        // Tell the ARM process to render the freshly-read audio data
        atomic_store(&shared_mem->producing, true);
    }
}
```

Producer (the ARM process in the chroot):

```c
int main() {
    // We don't have to loop forever, but we do in this example
    while (true) {
        // We spin until the consumer is finished.
        while (!atomic_load(&shared_mem->producing)) {}

        // Render the freshly-read input in SHM into the output buffer
        render_audio(shared_mem->to_render_audio_buffer, shared_mem->rendered_audio_buffer);

        // Tell the consumer to play the audio
        atomic_store(&shared_mem->producing, false);
    }
}
```

The actual code is more complicated, but this is the general paradigm of this sort of code. It functions fine (and I implemented it without issue). There is one glaring issue, though: instead of using a proper synchronization type like a condition variable (which lets you sleep the thread until a condition is met) or a semaphore (which lets you sleep the thread until a resource is available, at least as I like to think about it), I am spinning the CPU. Spinning wastes energy, ramps up the fans, and heats up the computer. In this scenario it is extremely inefficient and should be avoided.

Problems with Using the Standard Library Synchronization Types to Improve Performance

There is a nice standard condition variable type, pthread_cond_t, that can work between processes (when initialized with the PTHREAD_PROCESS_SHARED attribute). You also need a mutex to go with it, so there is pthread_mutex_t, and if you want to use a semaphore instead of a condition variable to solve this problem, there is sem_t.

Trying to solve the producer-consumer problem by replacing the spins with a pthread_cond_wait in the while body and a pthread_mutex_lock before the while loop will not work in our case, even though it would if both binaries were built for the same architecture. It took me some time to realize this, but the reason is simple. Consider this code (cc -o size_mismatch code.c -lpthread):

```c
#include <pthread.h>
#include <stdio.h>

int main(void) {
    // sizeof yields a size_t, so the matching format specifier is %zu
    printf("sizeof(pthread_mutex_t) is %zu\n", sizeof(pthread_mutex_t));
    return 0;
}
```

On an amd64 platform, the output is sizeof(pthread_mutex_t) is 40. On an ARMv7 platform, the output is sizeof(pthread_mutex_t) is 24. So if a pthread mutex is placed in shared memory from the amd64 side, the ARMv7 library will interpret its bytes with a completely different layout when it operates on the mutex, and you will encounter random errors. (My guess is that this comes down to word size: ARMv7 is 32-bit, so pointers and long are 4 bytes instead of amd64's 8.) Semaphores have the same problem: sem_t is 32 bytes on amd64 and 16 bytes on ARMv7. Only the condition variable sizes match, and even then, a condition variable must be used together with a mutex; without a mutex that both sides agree on, a wakeup can be missed and you will inevitably deadlock. So you cannot just use the standard library synchronization types to solve this problem.

Futexes

futex(2) is a syscall that operates on a single 32-bit integer (32 bits on all platforms, unlike the pthread types) and can be used to implement mutexes, condition variables, and semaphores without spinning the CPU. With the FUTEX_WAIT operation, the kernel puts a thread to sleep, given an address to sleep on and a value to check against. With FUTEX_WAKE, the kernel wakes threads sleeping on a given address.

For clarity, here are function signatures that capture what FUTEX_WAIT and FUTEX_WAKE do:

```c
#include <stdint.h>

// If *futex_word equals val, the thread sleeps until it is woken.
// If it does not equal val, the function returns immediately.
long futex_wait(uint32_t* futex_word, uint32_t val);

// Wakes up at most n threads sleeping on the given address.
long futex_wake(uint32_t* futex_word, uint32_t n);
```

Also, futexes are tricky. Or so says the whitepaper "Futexes Are Tricky," which is required reading if you are going to use them to implement a mutex; it explains the implementation exceptionally well. Once you understand the whitepaper, you can try to implement a mutex using its algorithm. To avoid race conditions when using futexes (yes, there is a better explanation than that), you work with atomic operations. GCC and Clang provide built-in atomic operations prefixed with __atomic that are unwieldy to use directly, so it is worth wrapping them. The atomic operations also take a memory order argument, whose purpose I do not entirely understand.

Mutexes Do Not Wait

When the consumer (music player) is waiting for the producer to finish, it has to actually wait. With a mutex, a thread only waits if the lock is already held, so the player would only block if the producer had already acquired the lock. This is why a mutex alone is not useful here. Instead, you need a semaphore, which is better imagined as a candy box. A semaphore waits until there is candy inside the box. If a mutex were the candy box, it would wait until the box is empty. The candy box starts empty in either case, so the first semaphore down waits, but the first mutex lock never does.

Implementing a Semaphore

Semaphores seem less tricky to implement. There are two functions, up and down. In the candy box analogy, down tries to grab candy from the box, but it cannot do that unless there is candy to grab, and up puts candy into the box. So up simply increments the amount of candy in the box, and if there could be waiters (the amount of candy was 0 before), it wakes them up using FUTEX_WAKE. down checks whether the amount of candy is greater than 0, and if so, decreases it by 1. If the amount is 0, it starts a FUTEX_WAIT that will be woken up when up is called. For a binary semaphore (where the box holds at most one piece of candy), you can use __atomic_fetch_add for up and __atomic_compare_exchange_n for down.

Using the New Mutex and Semaphore

We return to our producer/consumer pseudocode and redo it with the custom synchronization types.

Consumer (the audio player on the x86 host):

```c
int main() {
    // We don't have to loop forever, but we do in this example.
    // One semaphore must start at 1 for this to get going: e.g. full = 1
    // and empty = 0 lets the player take the first turn, like
    // producing == false in the spinning version. If both start at 0,
    // each side waits for the other forever.
    while (true) {
        custom_sem_down(&shared_mem->full); // wait until the buffer is "full"

        custom_mutex_lock(&shared_mem->data_lock);
        play_audio(shared_mem->rendered_audio_buffer);
        read_into_buffer(audio_file, shared_mem->to_render_audio_buffer);
        custom_mutex_unlock(&shared_mem->data_lock);

        // Tell the ARM process to render the freshly-read audio data
        custom_sem_up(&shared_mem->empty); // tell the producer that the buffer is empty
    }
}
```

Producer (the ARM process in the chroot):

```c
int main() {
    // We don't have to loop forever, but we do in this example
    while (true) {
        custom_sem_down(&shared_mem->empty); // wait until the buffer is "empty"

        custom_mutex_lock(&shared_mem->data_lock);
        render_audio(shared_mem->to_render_audio_buffer, shared_mem->rendered_audio_buffer);
        custom_mutex_unlock(&shared_mem->data_lock);

        // Tell the consumer to play the audio
        custom_sem_up(&shared_mem->full); // tell the consumer that the buffer is full
    }
}
```

This pseudocode works in the version I more-or-less implemented in this repo (instead of playing audio, it just increments numbers in an array and prints them on the consumer side). Given that, with some playing around, the same synchronization types should also work in the scaled-up scenario of a real-time MQA player and improve performance by not spinning.

End Result of Custom Synchronization Types

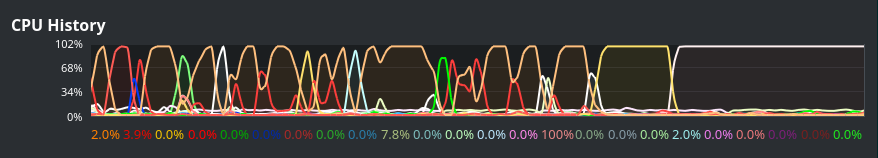

Before implementing futexes, this is the CPU usage of playing back an MQA file:

Dramatically lower after the new semaphore and mutex implementations.

Final Source Code

I have not decided whether I will be releasing the source code for this project (I am not distributing my changes to this project in source or binary form in the meantime, so I'm pretty sure I'm not violating the 3-Clause BSD license of the original mqa project), but if I do, I will update this blog post with a link to it.